Last week, we examined the accuracy of several presidential forecasts. For those familiar with statistics and probability theory, the results proved unsurprising: the forecasts came reasonably close to the state-level outcomes, but the average forecast outperformed them all.

Put another way, the aggregate of aggregates performed better than the sum of its parts.

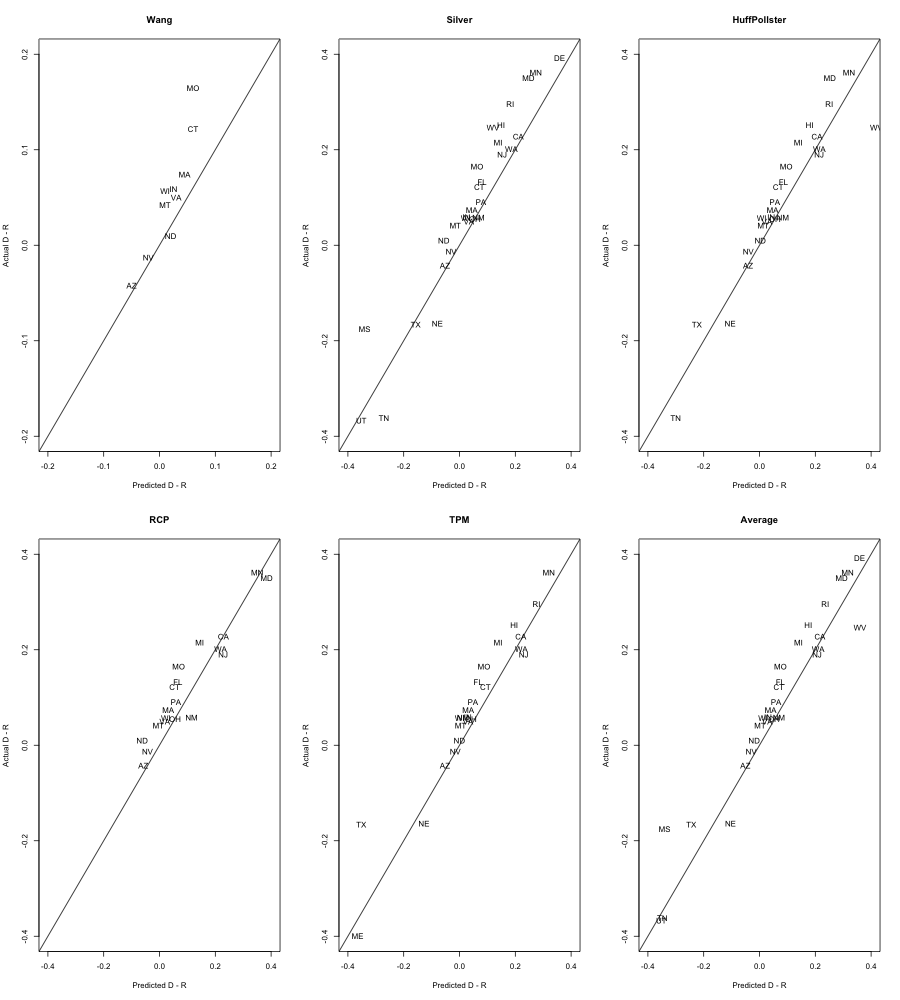

This year’s Senate races provide us another opportunity to test our theory. Today, I gathered the Senate forecasts from several prognosticators and compared them to the most recent Election Day returns. As before, I also computed the RMSE (root mean squared error) to capture how accurate each forecaster was on average.
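For readers who want the definition spelled out, here is the standard formula. The symbols are my own shorthand, and I am assuming that each forecast and each result is expressed in the same units (for example, a vote margin in percentage points). Over $n$ races, the RMSE is

$$
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(f_i - a_i\right)^2}
$$

where $f_i$ is the forecaster's point estimate for race $i$ and $a_i$ is the actual result. Because the errors are squared before averaging, one large miss counts for more than several small ones.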

We must note one modest complication: not all forecasters posited a point estimate for every Senate race. Nate Silver put forward a prediction for every race, but Sam Wang of Princeton University released only 10 predictions, all for competitive races.

We accordingly compute two different RMSEs. The first, RMSE-Tossups, is computed only over those races for which every forecaster put forward a prediction. (There are nine races that fall into this category: Arizona, Connecticut, Massachusetts, Missouri, Montana, Nevada, North Dakota, Virginia and Wisconsin.)

The other calculation, RMSE-Total, shows each forecaster’s RMSE over all of that forecaster’s predictions. Wang, for example, is evaluated on the 10 predictions he made, while Silver is evaluated on all 33 races.
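The difference between the two measures can be sketched in a few lines of Python. This is only an illustration: the race labels, forecaster names and margins below are hypothetical placeholders, not the real 2012 figures, and the helper names (`rmse`, `rmse_tossups`, `rmse_total`) are mine.

```python
import math

def rmse(forecast, actual):
    """Root mean squared error over the races present in `forecast`."""
    squared = [(forecast[race] - actual[race]) ** 2 for race in forecast]
    return math.sqrt(sum(squared) / len(squared))

# Hypothetical margins in points; NOT the real 2012 numbers.
actual = {"A": 4.0, "B": -2.0, "C": 10.0}
forecasts = {
    "full_coverage": {"A": 1.0, "B": -2.0, "C": 4.0},  # predicts every race
    "tossups_only": {"A": 2.0, "B": 0.0},              # predicts a subset
}

# Races for which every forecaster published an estimate.
common = set.intersection(*(set(f) for f in forecasts.values()))

def rmse_tossups(name):
    """RMSE restricted to the races that all forecasters covered."""
    subset = {race: forecasts[name][race] for race in common}
    return rmse(subset, actual)

def rmse_total(name):
    """RMSE over every race this particular forecaster covered."""
    return rmse(forecasts[name], actual)

for name in forecasts:
    print(name, round(rmse_tossups(name), 2), round(rmse_total(name), 2))
```

Note that for a forecaster who predicted only the shared races, the two numbers coincide; they diverge only for forecasters with broader coverage, which is why RMSE-Total is not a like-for-like comparison.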

| Forecast | RMSE-Tossups | RMSE-Total |
| --- | --- | --- |
| Wang | 4.7 | 4.6 |
| Silver | 5.1 | 8.0 |
| Pollster | 3.8 | 5.8 |
| RealClearPolitics | 5.4 | 5.1 |
| TalkingPointsMemo | 3.9 | 8.0 |
| Average Forecast | 4.4 | 5.4 |

The numbers in the above table give us a sense of how accurate each forecast was. The bigger the number, the larger the error. So what can we learn?

Once again, the average performs admirably. It’s not perfect, of course: for some races, there are precious few forecasts to average over. Delaware, for instance, has only the 538 prediction.

To begin accounting for this, we weight the RMSE by the share of forecasts used to compute the average. If we limit our evaluation of the average to only those races with three or more available forecasts, the RMSE drops to 4.8.
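The post does not spell out the exact weighting, so the following Python sketch shows one plausible reading rather than the actual calculation: build the average forecast race by race, then either (a) keep only races with at least three forecasts, or (b) weight each race's squared error by the share of forecasters who covered it. All data, names and the weighting scheme itself are assumptions for illustration.

```python
import math

# Hypothetical forecast margins in points; NOT the real 2012 numbers.
forecasts = {
    "f1": {"A": 1.0, "B": -2.0, "C": 4.0},
    "f2": {"A": 2.0, "B": 0.0},
    "f3": {"A": 6.0, "B": -1.0},
}
actual = {"A": 4.0, "B": -2.0, "C": 10.0}

def average_forecast(forecasts):
    """Return {race: (mean estimate, number of forecasts averaged)}."""
    by_race = {}
    for estimates in forecasts.values():
        for race, value in estimates.items():
            by_race.setdefault(race, []).append(value)
    return {race: (sum(v) / len(v), len(v)) for race, v in by_race.items()}

def rmse_min_forecasts(avg, actual, min_forecasts=3):
    """RMSE of the average over races with at least `min_forecasts` inputs.

    Raises ZeroDivisionError if no race meets the threshold.
    """
    squared = [(mean - actual[race]) ** 2
               for race, (mean, n) in avg.items() if n >= min_forecasts]
    return math.sqrt(sum(squared) / len(squared))

def rmse_weighted(avg, actual, n_forecasters):
    """RMSE with each race weighted by its share of available forecasts
    (an assumed scheme, not necessarily the one used in the post)."""
    weights = {race: n / n_forecasters for race, (_, n) in avg.items()}
    total = sum(weights[r] * (avg[r][0] - actual[r]) ** 2 for r in avg)
    return math.sqrt(total / sum(weights.values()))

avg = average_forecast(forecasts)
print(rmse_min_forecasts(avg, actual))
print(rmse_weighted(avg, actual, len(forecasts)))
```

In this toy data, race "C" has a single forecast and a large miss, so dropping or down-weighting it lowers the error of the average, which mirrors the direction of the effect described above.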

What else emerges from the table? For one, the poll-only forecasts — especially the Wang, RCP and Pollster forecasts — perform better than Nate’s mélange of state polls and economic fundamentals.

North Dakota, where Democrat Heidi Heitkamp bested Republican Rick Berg, provides a case in point. Pollster and RealClearPolitics both predicted a narrow win for Ms. Heitkamp. The 538 model considered the same polls upon which Pollster and RCP based their predictions; but the fundamentals in Mr. Silver’s model overwhelmed the polls. As a result, the 538 model predicted that Mr. Berg would win by more than five points. [See Footnote 1.]

In sum, however, all of the forecasts did reasonably well at calling the overall outcome. We can chalk this up to another victory for (most) pollsters and the quants who crunch the data.

1. Addendum: In the original post (text above is unchanged), I argued that Mr. Silver’s economic fundamentals pushed his ND forecast in the wrong direction. This undoubtedly contributed to his inaccuracy in North Dakota, but it wasn’t the main factor. As commenters pointed out, Silver’s model was selective in the polls it used to predict the outcome. As of the last run of the model, Silver’s polling average lined up fairly well with RCP (Berg +5) but not with Pollster (Heitkamp +0.3). Mea culpa.

Glenn says:

Re: Nate and ND, according to the 538 website, he had the poll average favoring Berg by some 3 points. This appears to be because he did not, in fact, consider all of the same polls. You can criticize him for that, but the claim that his fundamentals overrode the poll average is mistaken, at least based on what is currently up on the 538 website.

Matt W says:

1) Silver would say this result is unsurprising. (In fact, I’m pretty sure he said exactly that at one point.) You’re reducing uncertainty by adding more data.

2) Interesting, looking at all those graphs, that in general all of the models underestimated Democratic performance, with only a couple exceptions (e.g. Nebraska, West Virginia.)

chris says:

A request for clarification regarding the ND senate polling. RCP lists its last polling average as Berg+5.7. I can also see that RCP, 538, and TPM do not include the same set of polls in their averages. The pollsters that show the favorable results for Heidi Heitkamp are not included in RCP or 538, according to the versions of their pages currently available. Nate’s “state fundamentals” move his prediction in the wrong direction, but it is the choice of polls rather than his adjustments that causes the predicted winner to be wrong. Again, this is all according to the versions of the information currently available, not archived versions of what they posted on election day.

Brice D. L. Acree says:

Ah, the commenters are correct vis-à-vis Silver’s fundamentals and poll selections. I’ll append a note to the post.

Melisa Daugherty says:

Thanks to data compiled by Kevin Collins at Princeton, we can examine the accuracy of some of the state-level forecasting models. Nate Silver’s 538 model performs marginally better than the pack. But the best predictor: an average of the forecasts.