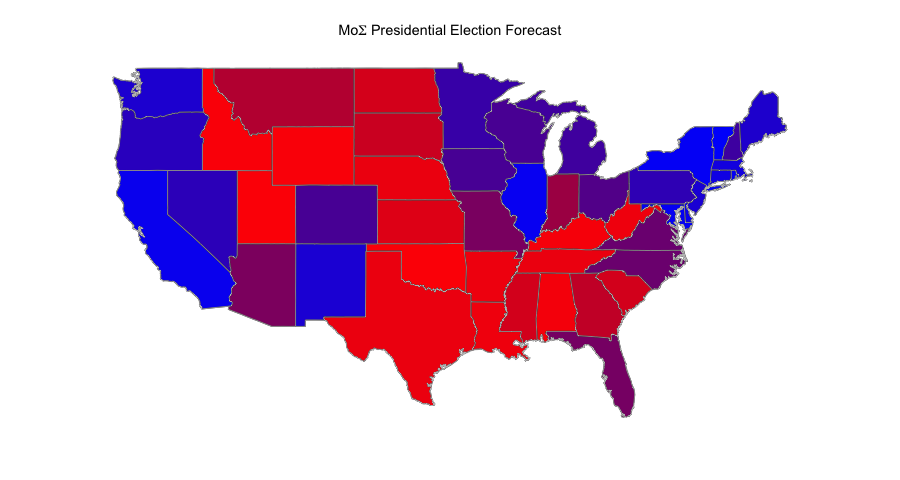

As we published yesterday, the Margin of Error forecast predicts Mr. Obama to secure reelection with 303 electoral votes to Mr. Romney’s 235. This translates roughly into a 68 percent chance for Mr. Obama to win, leaving a nonnegligible 32 percent chance of an upset victory by Mr. Romney.

One of the more interesting elements of the model is that it’s agnostic to state-level polling. Most of the highly trafficked forecasting models (the gold standard is Nate Silver’s 538 model) use various methodologies for aggregating state- and national-level polling.

The MoE forecast, on the other hand, uses very few variables. The national popular vote is predicted using late-season approval data; the state-level votes are forecast using (a) previous election results; (b) August-November change in unemployment; (c) home state advantage; and (d) a regional dummy variable. No polls.
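To make the structure concrete, here is a minimal sketch of what a polls-free, state-level linear predictor with those four inputs could look like. Everything specific in it is an assumption for illustration: the `StateInputs` fields, the sign conventions, and above all the coefficients, which are made up and are not the MoE estimates.

```python
from dataclasses import dataclass


@dataclass
class StateInputs:
    prev_incumbent_share: float  # incumbent party's vote share last election (percent)
    unemployment_change: float   # August-November change in unemployment (points)
    home_state: int              # +1 incumbent's home state, -1 challenger's, 0 otherwise
    in_region: int               # regional dummy: 1 if the state is in the flagged region


# Hypothetical coefficients, invented for this sketch (NOT the fitted model).
COEFS = {
    "intercept": 5.0,
    "prev_incumbent_share": 0.9,
    "unemployment_change": -1.0,
    "home_state": 2.0,
    "in_region": -3.0,
}


def predict_incumbent_share(x: StateInputs, coefs: dict = COEFS) -> float:
    """Linear predictor: intercept plus coefficient times each fundamental."""
    return (
        coefs["intercept"]
        + coefs["prev_incumbent_share"] * x.prev_incumbent_share
        + coefs["unemployment_change"] * x.unemployment_change
        + coefs["home_state"] * x.home_state
        + coefs["in_region"] * x.in_region
    )


# A made-up state: 50 percent last time, unemployment up half a point.
example = StateInputs(prev_incumbent_share=50.0, unemployment_change=0.5,
                      home_state=0, in_region=0)
print(predict_incumbent_share(example))
```

In a real version the coefficients would be estimated from past elections rather than typed in by hand. The point of the sketch is only that nothing on the right-hand side is a poll.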

Despite the stark difference in methodology, the forecast comes in well in line with most quantitative models. The most notable gap between our model and many of the poll-aggregation models is, in fact, that ours makes a far more conservative prediction of uncertainty.
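A quick way to see why the uncertainty matters: hold the point forecast fixed and widen the error around it. The sketch below assumes the final margin is normally distributed, which is a simplification and not necessarily how any of these models work, and the margins and standard deviations are hypothetical numbers chosen for illustration.

```python
from statistics import NormalDist


def win_probability(expected_margin: float, margin_sd: float) -> float:
    """P(margin > 0) when the margin is modeled as Normal(expected_margin, margin_sd)."""
    return 1.0 - NormalDist(mu=expected_margin, sigma=margin_sd).cdf(0.0)


# Same hypothetical 2-point lead, two different levels of uncertainty.
confident = win_probability(expected_margin=2.0, margin_sd=2.0)
cautious = win_probability(expected_margin=2.0, margin_sd=4.0)
print(f"{confident:.3f} {cautious:.3f}")  # prints "0.841 0.691"
```

Two models can agree on who is ahead and by how much, and still disagree noticeably on the odds, simply because one of them is less sure of itself.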

So, what’s the deal with this fundamentals-based model, anyway? In my opinion, a fundamentals-based forecast brings some distinct advantages and disadvantages.

For starters, fundamentals-based forecasts aren’t terribly exciting. We published the model over the summer, and have made only a couple of updates since then (updating the change in unemployment once those numbers were released, for example). There’s just not much else to do, and thus nothing exciting really happens over the course of the campaign. We have an insatiable hunger for the latest campaign dynamics, and a fundamentals-based model just can’t deliver.

On a more serious note, fundamentals models generally cannot incorporate quirky election-year events or conditions. Consider the case of Ohio: the auto bailout seems to have significantly affected the race there. But when we built the model this summer, there really wasn’t an ex ante reason to believe this to be the case. As a result, there’s no variable in the model for states with large auto industries, and our forecast of Ohio may lean more toward Mr. Romney than it otherwise should (see note 1).

In a way, though, this criticism is also a strength of the model. First, it’s easy to see effects where they do not exist. You could go slaphappy reading the pundits and the myriad campaign events or factors that will supposedly affect the outcome. But even setting aside potential model identification issues, who really wants dozens of event/factor dummy variables in the model?

Second, on a methodological front, it proves difficult to estimate the size of an effect of something like the auto bailout using historical data since — well, it’s unique to this race. Especially if you’re trying to stay away from polling numbers to present a clean, fundamentals-based election model, you’d essentially have to guess at how much to adjust Ohio (and Michigan) to account for the bailout. Not too scientific.

Third, from the social-scientific perspective, using the fundamentals gives us a kind of baseline. Andrew Gelman posted a must-read entry yesterday on this point. All election forecasting models use a “default” on which to build the model; this could be the last election, an average over past elections, or a fundamentals-style forecast.

But the baseline is useful for reasons other than serving as a foundation for further prediction. It also helps us spot “effects,” like the potential effect of the auto bailout in Ohio, or possible effects from Sandy.

In the post-game analysis, many people will claim to explain how Mr. Obama or Mr. Romney won the states they won. The fundamentals-based models give us a reference point. Should Mr. Romney lose Michigan, which the model predicts will happen 69 percent of the time, will it have happened because he wanted to let Detroit go bankrupt? Probably not: a simpler explanation based only on economic fundamentals and prior voting history would forecast him to lose the state whether or not he had ever penned that op-ed.

In sum, fundamentals-based models are useful if not always exciting. They shouldn’t replace polling aggregators. Those are, on the whole, quite accurate and a lot more fun. But the fundamentals-based models can give you a broad view of the electoral landscape and help to ground your electoral expectations.

Notes:

1. Some might argue that you don’t need an ex ante reason to include the auto bailout or similar types of predictors. Here’s the problem: you would only know to include that type of variable if you were concerned that your model wasn’t lining up with the polls. But coercing the model into fitting polling data is just silly: if you want a polling model, use polling data! If you want to put together a fundamentals-based model, come up with a reasonable (i.e., powerful but not overfit) model and stick with it.