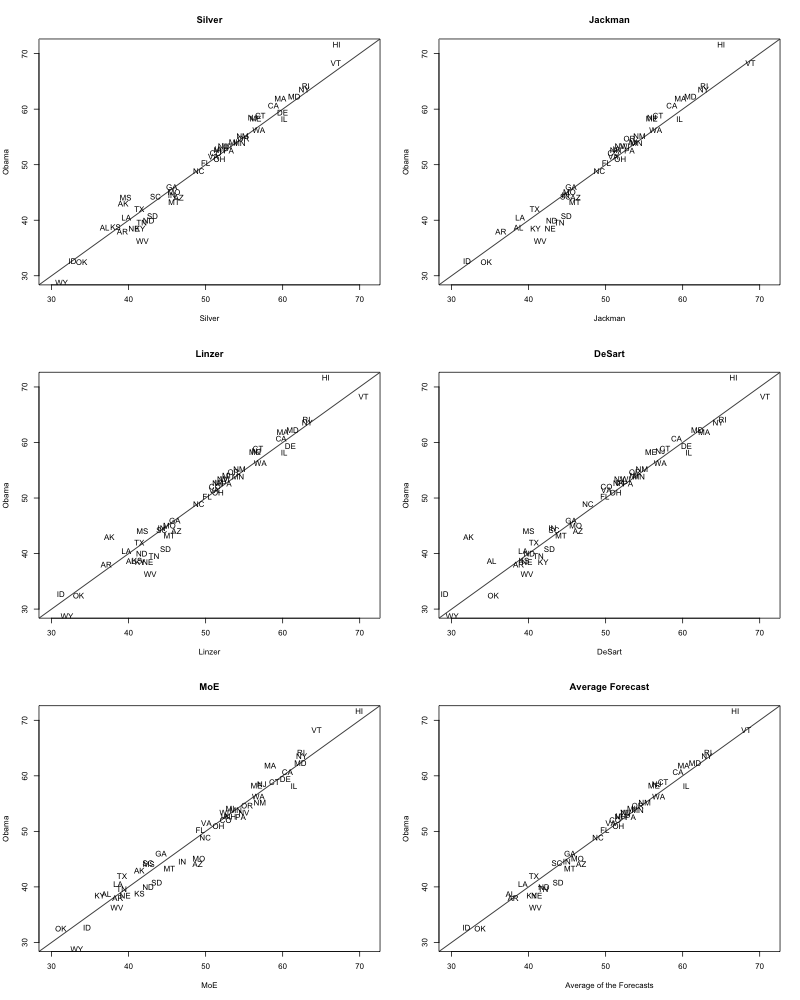

Thanks to data compiled by Kevin Collins at Princeton, we can examine the accuracy of some of the state-level forecasting models. Nate Silver’s 538 model performs marginally better than the pack. But the best predictor: an average of the forecasts.

To assess accuracy, we calculate the root mean squared error (RMSE). To do so, we take the actual result in state $i$, $y_i$, and a forecaster’s prediction in state $i$, $\hat{y}_i$, and calculate, over the $n$ states forecast:

$$\text{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}$$

As you can see, higher values indicate that the forecaster made bigger errors. Put another way, the number shows us how badly each forecaster missed on average.
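A minimal Python sketch of the formula above. The three “states” and their vote shares are invented for illustration; they are not the Collins data.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error: sqrt of the mean of (y_i - yhat_i)^2 over states."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    squared_errors = [(y - y_hat) ** 2 for y, y_hat in zip(actual, predicted)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical results and predictions (percent of the vote) for three states.
actual = [51.0, 50.0, 52.0]
predicted = [50.0, 52.0, 52.0]
print(round(rmse(actual, predicted), 3))
```

Here the misses are 1, 2 and 0 points, so the RMSE is the square root of 5/3, about 1.29. Squaring before averaging means a single large miss counts for more than several small ones.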

Alex Jakulin, a statistician at Columbia, helpfully pointed out that a more useful metric may be the RMSE weighted by each state’s importance. We would expect misses to be larger in small states and should correct for that. Accordingly, we present both the RMSE for each forecaster and the RMSE weighted by each state’s share of electoral votes.
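The post does not spell out the exact weighting formula, so the sketch below assumes one natural reading: each state’s squared error is weighted by $w_i = EV_i / \sum_j EV_j$, giving $\text{RMSE}_w = \sqrt{\sum_i w_i (y_i - \hat{y}_i)^2}$. The electoral-vote counts and vote shares are hypothetical.

```python
import math


def weighted_rmse(actual, predicted, electoral_votes):
    """RMSE with each state's squared error weighted by its share of electoral votes.

    Assumption: weights are EV_i / sum(EV); with equal weights this reduces
    to the ordinary RMSE.
    """
    if not (len(actual) == len(predicted) == len(electoral_votes)) or not actual:
        raise ValueError("need non-empty sequences of equal length")
    total_ev = sum(electoral_votes)
    weighted_sq = sum(
        (ev / total_ev) * (y - y_hat) ** 2
        for y, y_hat, ev in zip(actual, predicted, electoral_votes)
    )
    return math.sqrt(weighted_sq)


# Hypothetical: the two big misses fall in small states (3 EVs each).
actual = [51.0, 50.0, 52.0]
predicted = [50.0, 52.0, 52.0]
electoral_votes = [3, 3, 29]
print(round(weighted_rmse(actual, predicted, electoral_votes), 3))
```

With these made-up numbers the unweighted RMSE is about 1.29 but the weighted RMSE is about 0.65, because the errors sit in states that control few electoral votes. That is the same direction of change the table shows for every forecaster.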

| Forecast | RMSE | Weighted RMSE |
|---|---|---|
| Silver 538 | 1.93 | 1.60 |
| Linzer Votamatic | 2.21 | 1.63 |
| Jackman Pollster | 2.15 | 1.71 |
| DeSart / Holbrook | 2.40 | 1.79 |
| Margin of Σrror | 2.15 | 1.98 |
| Average Forecast | 1.67 | 1.37 |

All told, the forecasts did quite well. But look at what worked better: averaging over the forecasts. This makes good statistical sense: as Alex points out with a fun Netflix example, it makes more sense to keep as much information as possible. In a Bayesian framework, why pick just one “most probable” parameter estimate, instead of averaging over all possible parameter settings, with each weighted by its predictive ability?
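A small illustration of why averaging helps, using two hypothetical forecasters (not the ones in the table). Because RMSE is a scaled Euclidean norm of the error vector, the triangle inequality guarantees that an equal-weight average of forecasts never has a higher RMSE than the mean of the individual RMSEs. It can beat even the best single forecaster when their errors partly offset, as they do here.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error over states."""
    return math.sqrt(sum((y - p) ** 2 for y, p in zip(actual, predicted)) / len(actual))


def average_forecast(forecasts):
    """Equal-weight average of several forecasters' state-by-state predictions."""
    return [sum(state_preds) / len(state_preds) for state_preds in zip(*forecasts)]


# Hypothetical results and forecasts (percent of the vote) for three states.
actual = [51.0, 50.0, 52.0]
forecasts = {
    "forecaster_a": [50.0, 52.0, 52.0],
    "forecaster_b": [52.0, 49.0, 51.0],
}

for name, preds in forecasts.items():
    print(name, round(rmse(actual, preds), 3))

avg = average_forecast(list(forecasts.values()))
print("average", round(rmse(actual, avg), 3))
```

Forecaster A misses by about 1.29 points and B by 1.0, but their errors point in opposite directions in two of the three states, so the averaged forecast misses by only about 0.41. A weighted average, with weights tied to each model’s past accuracy, is a natural extension of the same idea.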

During the Republican primaries, Harry Enten published a series of stories on this blog doing precisely that, and then, as now, the “aggregate of the aggregates” performed better than any individual prediction on its own.

On the whole, all the forecasting models did quite well. As the figure above shows, critics of these election forecasts ended up looking pretty foolish.

Now I only wonder whether 2016 will see a profusion of aggregate aggregators and, if so, how much grief Jennifer Rubin will give them.

Tom says:

Surely cell K43 on that spreadsheet must be wrong.

Brice D. L. Acree says:

Tom,

Yes, some of the data showed Romney’s vote instead of Obama’s. I went through and fixed those entries before computing the RMSE. My earlier numbers had included some problems with the data, as well as some old data. I’ll post the data I used.

Chris says:

Any reason that your RMSE analysis did not include Princeton’s Sam Wang? Could you add that to the chart?

Brice D. L. Acree says:

Chris,

I checked Prof. Wang’s forecasts, but couldn’t find the state-by-state predictions. I think Kevin Collins got his directly. I can e-mail Prof. Wang to get his numbers.

gwern says:

I actually pinged Wang on Twitter last night as part of collecting a larger dataset of predictions, but he hasn’t gotten back to me AFAIK, so don’t necessarily expect a reply soon.

(I was collecting all the overall presidential stats, per-state margins, per-state victory %, Senate margins, and Senate victory %, for Silver, Linzer, DeSart, Intrade, Margin of Error, Wang, Putnam, Jackman, and Unskewed Polls, not that I got all of them but the Brier scores and RMSE are still interesting to look at.)

gwern says:

My results so far, if anyone is interested in those Brier/RMSEs:

(It was for this blog post: http://appliedrationality.org/2012/11/09/was-nate-silver-the-most-accurate-2012-election-pundit/ )

Brian says:

Wang’s state by state forecasts are found in the right-hand column of his site under “The Power of Your Vote”. He doesn’t list every state but he does show every swing state.

Luke Muehlhauser says:

Additional Brier score and RMSE score comparisons here:

http://appliedrationality.org/2012/11/09/was-nate-silver-the-most-accurate-2012-election-pundit/

Some Body says:

Isn’t it a bit too early, though? We don’t yet have the final results, and won’t have for a couple of weeks (Ohio counting provisional ballots on the 19th and all).

Brice D. L. Acree says:

Brody,

It’s never too early! But more to your point… yeah, we will update with final results. My über-sophisticated forecast: these RMSEs won’t change much.

Brash Equilibrium says:

I describe some possible methods for calculating model-averaging weights from Brier scores and RMSEs at my blog. Check it out. Maybe Margin of Error will see if a weighted-average model does *even better* than a simple average?

http://www.malarkometer.org/1/post/2012/11/some-modest-proposals-for-weighting-election-prediction-models-when-model-averaging.html#.UJ2kBeQ0WSo

Paul C says:

Yes, it’s too early. http://www.huffingtonpost.com/2012/11/08/best-pollster-2012_n_2095211.html?utm_hp_ref=@pollster

Pingback/Trackback

Final Result: Obama 332, Romney 206 | VOTAMATIC

Paul C says:

Sam Wang computes his own Brier score here with others: http://election.princeton.edu/2012/11/09/karl-rove-eats-a-bug/#more-8830

fLoreign says:

Yeah dude, do make aggregators of poll aggregators four years from now. I’ve seen the collapse of mortgage derivatives; will the election forecast business be the next bubble? Like, fifty statisticians for each survey interview operator, so that the world of forecasts could create its own noise?

I’m betting on popcorn.

Brash Equilibrium says:

Here’s a funny short story I just wrote about an election prediction bubble. Enjoy.

“Aggregatio ad absurdum”

Arnold Evans says:

What happens if you calculate Brier scores and RMSEs for only swing states and close (somehow defined) races?

In some sense, being right about VA, FL and OH should matter more than being right about CA, NY and TX. I don’t care who’s the most accurate across a lot of races, so I would like to know whose scores are best in the races that I, and most people, actually cared about.

Pingback/Trackback

More accurate than Nate Silver or Markos—and simple, too | The Penn Ave Post

Pingback/Trackback

More accurate than Nate Silver or Markos—and simple, too | FavStocks

Pingback/Trackback

Evaluating Election Forecasts | Robust Analysis

Pingback/Trackback

Forecasting Round-Up No. 2 « Dart-Throwing Chimp

Pingback/Trackback

Homepage